01. What is Kubernetes? Explain in your own words, and why do we call it K8s?

Kubernetes, also known as “K8s” for short, is an open-source container orchestration platform that automates the deployment, scaling, and management of containerized applications.

In simpler terms, Kubernetes is a tool that helps developers deploy and manage their applications in a way that is efficient, reliable, and scalable. It allows them to package their applications and dependencies into containers, which can then be easily moved between different environments such as development, testing, and production. Kubernetes then manages the deployment of these containers, ensuring they are running and healthy, and scales them up or down based on demand.

The name “Kubernetes” comes from the Greek word for “helmsman” or “pilot”, reflecting its role in steering and managing the deployment of containers.

The term “K8s” is derived from the word “Kubernetes”, where “8” stands for the eight letters (“ubernete”) between the “K” and the “s”. This kind of abbreviation is called a numeronym and is common in technical circles as a way of shortening long words, for example “i18n” for “internationalization”.
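The abbreviation rule is mechanical enough to write down. The following is a small illustrative sketch; the function name `numeronym` is made up for this example and is not part of any Kubernetes tooling.

```python
def numeronym(word: str) -> str:
    """Abbreviate a word as: first letter + number of middle letters + last letter."""
    if len(word) < 3:
        return word  # too short to have any middle letters worth counting
    return f"{word[0]}{len(word) - 2}{word[-1]}"


print(numeronym("Kubernetes"))  # K8s
```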

02. What are the benefits of using K8s?

There are many benefits of using Kubernetes (K8s) to manage containerized applications. Here are some examples:

Scalability: Kubernetes makes it easy to scale your applications up or down based on demand. It can automatically spin up additional instances of your application to handle increased traffic, and then scale them down again when demand decreases. This allows you to ensure that your application is always available and responsive to user requests.

Fault tolerance: Kubernetes is designed to be fault-tolerant, meaning it can automatically detect and recover from failures. For example, if one of your application instances crashes, Kubernetes can spin up a new instance to replace it. When several instances are running, the remaining ones keep serving requests in the meantime, so users usually see no interruption in service.

Portability: Kubernetes is platform-agnostic, meaning it can run on any infrastructure, whether it be on-premises or in the cloud. This allows you to easily move your applications between different environments without having to worry about compatibility issues.

Resource utilization: Kubernetes lets you declare how much CPU and memory each application instance requests and the limit it may not exceed, and it uses those values when placing instances. It automatically distributes workloads across multiple nodes in a cluster, making efficient use of the available resources.

Automation: Kubernetes automates many of the tasks involved in managing containerized applications, such as deployment, scaling, and health monitoring. This can help reduce the workload for developers and operations teams, allowing them to focus on other tasks.

Community support: Kubernetes has a large and active community of developers and users who contribute to its ongoing development and support. This means that you can benefit from a wealth of knowledge, resources, and best practices when using Kubernetes.

These are just a few examples of the many benefits of using Kubernetes. Overall, Kubernetes can help make it easier and more efficient to manage and scale containerized applications, allowing you to focus on delivering value to your users.
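To make the scalability point concrete, here is a minimal sketch of the replica calculation documented for the Horizontal Pod Autoscaler, the Kubernetes feature that scales on a metric: desired replicas = ceil(current replicas × current metric / target metric). The function name and the `min_replicas` / `max_replicas` defaults are assumptions chosen for illustration, and the real autoscaler applies further rules (such as a tolerance band and stabilization) that are left out here.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10):
    """Simplified autoscaling rule: scale in proportion to metric / target."""
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    # Clamp the result to the configured bounds (illustrative defaults).
    return max(min_replicas, min(max_replicas, raw))


# 4 replicas at 90% average CPU with a 50% target -> scale up
print(desired_replicas(4, 90, 50))
# 4 replicas at 25% average CPU with a 50% target -> scale down
print(desired_replicas(4, 25, 50))
```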

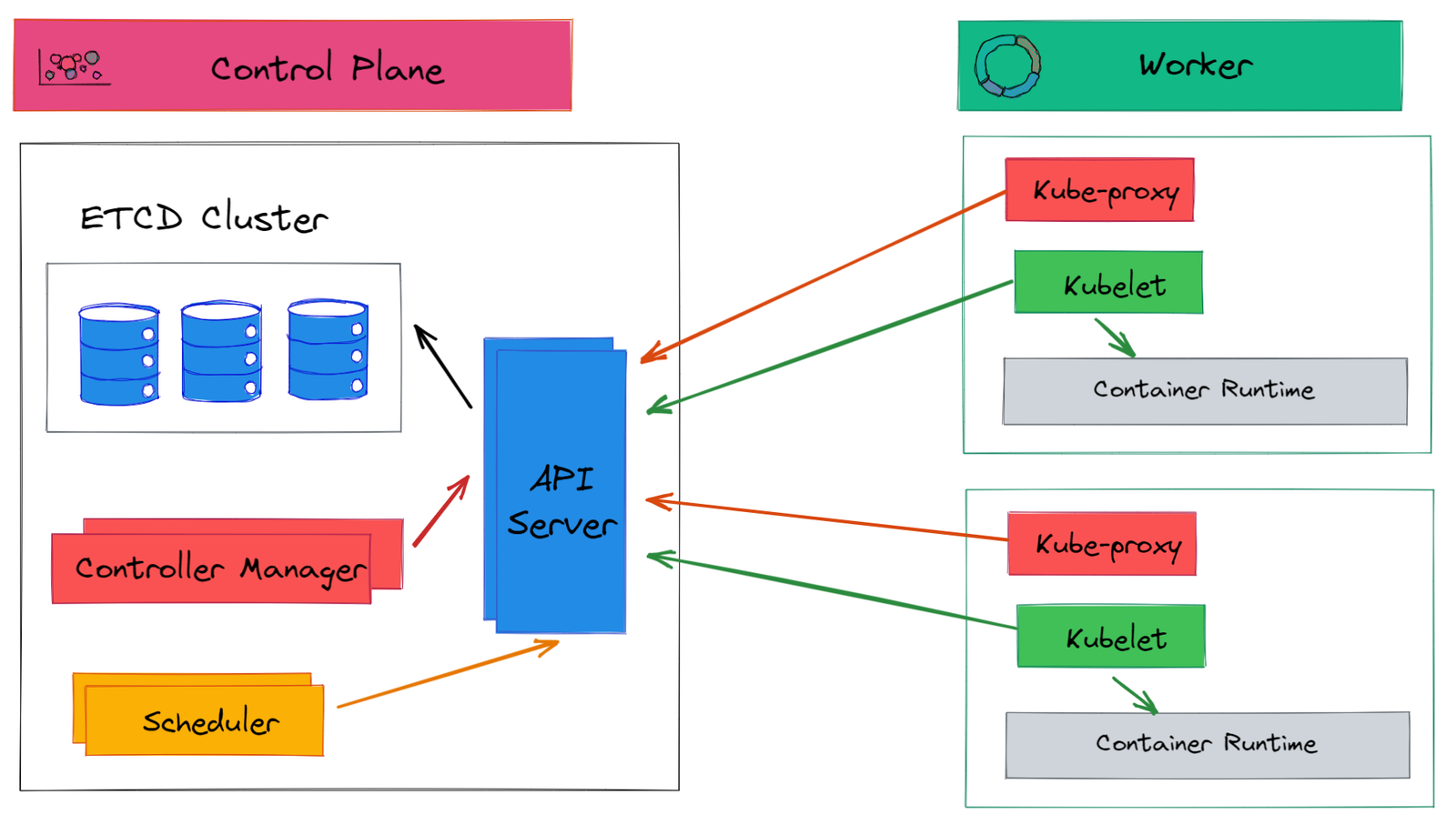

03. Explain the architecture of Kubernetes

A Kubernetes cluster is a set of physical or virtual machines and other infrastructure resources that are needed to run your containerized applications. Each machine in a Kubernetes cluster is called a node. There are two types of nodes in each Kubernetes cluster:

Master Node(s): hosts the Kubernetes control plane components and manages the cluster

Worker node(s): runs your containerized applications

4. Kubernetes Master / Control Plane:

• The master node is also called the control plane. It is the set of components that manage the state of the Kubernetes cluster.

• Master is responsible for managing the complete cluster.

• You can access the master node via the CLI, GUI, or API

• The master watches over the nodes in the cluster and is responsible for the actual orchestration of containers on the worker nodes

• For achieving fault tolerance, there can be more than one master node in the cluster.

• It is the access point from which administrators and other users interact with the cluster to manage the scheduling and deployment of containers.

• It has four main components: etcd, the scheduler (kube-scheduler), the controller manager (kube-controller-manager), and the API server (kube-apiserver).

Master / Control Plane Components:

ETCD

• etcd is a distributed, reliable key-value store that Kubernetes uses to store all the data needed to manage the cluster.

• When the cluster has multiple masters, etcd runs as a group of members (typically on the master nodes) that replicate this data among themselves, so the cluster state survives the loss of a single member.

• etcd's consistent writes are the basis for the locks that prevent conflicts between the masters.
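etcd keeps its members consistent with the Raft consensus protocol, which only accepts a write once a majority (a quorum) of members agree. The sketch below shows the arithmetic behind the common advice to run an odd number of members; the function names are illustrative only.

```python
def quorum(members: int) -> int:
    """Smallest number of members that forms a majority."""
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return members - quorum(members)


for n in (1, 3, 4, 5):
    print(f"{n} members: quorum={quorum(n)}, tolerated failures={tolerated_failures(n)}")
```

Note that four members tolerate no more failures than three, which is why odd cluster sizes are preferred.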

Scheduler

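• The scheduler is responsible for distributing work across multiple nodes. The unit it places is the Pod, a group of one or more containers that run together.

• It watches for newly created Pods that have no node assigned yet and picks a suitable node for each of them.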
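The scheduling decision can be pictured as two steps: filter out nodes that cannot fit the Pod, then score the remaining nodes and pick the best one. The following is a toy sketch under that assumption. The `Node` class, the `schedule` function, and the "most free CPU wins" scoring rule are all invented for illustration and are far simpler than what kube-scheduler actually does.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str
    free_cpu_m: int    # free CPU in millicores
    free_mem_mi: int   # free memory in MiB


def schedule(pod_cpu_m, pod_mem_mi, nodes):
    """Return the name of the chosen node, or None if no node fits."""
    # Step 1 (filter): keep only nodes with enough free CPU and memory.
    feasible = [n for n in nodes
                if n.free_cpu_m >= pod_cpu_m and n.free_mem_mi >= pod_mem_mi]
    if not feasible:
        return None  # in a real cluster the Pod would stay Pending

    # Step 2 (score): toy rule, prefer the node left with the most free CPU.
    best = max(feasible, key=lambda n: (n.free_cpu_m - pod_cpu_m,
                                        n.free_mem_mi - pod_mem_mi))

    # Record the placement so later decisions see the reduced capacity.
    best.free_cpu_m -= pod_cpu_m
    best.free_mem_mi -= pod_mem_mi
    return best.name


cluster = [Node("node-a", 500, 1024), Node("node-b", 2000, 4096)]
print(schedule(1000, 512, cluster))  # only node-b has enough CPU
```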

API server

• Masters communicate with the rest of the cluster through the kube-apiserver, the main access point to the control plane.

• It validates and executes the user’s REST commands.

• kube-apiserver is the only component that reads from and writes to etcd directly. Keeping the containers deployed in the cluster in line with the configuration stored in etcd is the job of the controllers and the kubelet, which do so through the API server.

Controller manager

• The controllers are the brain behind orchestration.

• They are responsible for noticing and responding when nodes, containers or endpoints go down. The controllers make decisions to bring up new containers in such cases.

• The kube-controller-manager runs control loops that manage the state of the cluster by checking if the required deployments, replicas, and nodes are running in the cluster
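A control loop of this kind compares the desired state with the observed state and acts on the difference. Here is a minimal sketch of one reconcile pass for a replica count. The function name, the pod naming scheme, and the use of a plain list as "the cluster" are assumptions made for the example, not how the real controllers are implemented.

```python
from typing import List


def reconcile(desired_replicas: int, running: List[str],
              name_prefix: str = "web") -> List[str]:
    """Return the list of running pods after one reconcile pass."""
    running = list(running)  # work on a copy, leave the caller's list alone
    counter = 0

    # Too few pods: create replacements until the desired count is reached.
    while len(running) < desired_replicas:
        candidate = f"{name_prefix}-{counter}"
        counter += 1
        if candidate not in running:
            running.append(candidate)

    # Too many pods: remove the surplus.
    while len(running) > desired_replicas:
        running.pop()

    return running


# One pod survived a node failure; the loop brings the count back to three.
print(reconcile(3, ["web-0"]))
```

A real controller runs this comparison continuously, so a failure is corrected on the next pass without anyone issuing a command.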

Kubectl

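• kubectl is the command-line utility used to interact with a K8s cluster. It is a client tool that runs on the user’s machine rather than a component of the control plane itself.

• It uses the APIs provided by the API server to interact with the cluster.

• It is also referred to as the kube command-line tool or “kube control”.

• It is used to deploy and manage applications on a Kubernetes cluster.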
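Because kubectl talks to the API server over REST, each command maps to an HTTP request on a resource path. For instance, `kubectl get pods` in the default namespace corresponds to a GET on `/api/v1/namespaces/default/pods`. The helper below is a hypothetical sketch that builds such paths for namespaced resources only; its name and parameters are illustrative and not part of kubectl.

```python
def resource_path(resource, namespace="default", group="", version="v1", name=None):
    """Build the REST path for a namespaced Kubernetes resource."""
    # The core API group lives under /api; named groups live under /apis/<group>.
    if group == "":
        prefix = f"/api/{version}"
    else:
        prefix = f"/apis/{group}/{version}"
    path = f"{prefix}/namespaces/{namespace}/{resource}"
    # Appending a name addresses a single object instead of the collection.
    return f"{path}/{name}" if name else path


print(resource_path("pods"))
print(resource_path("deployments", namespace="prod", group="apps", name="web"))
```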

Kubernetes Worker Components:

Kubelet

• Each worker node runs the kubelet, an agent that interacts with the master to provide health information about the worker node.

• It also carries out the actions requested by the master on the worker node, such as starting and stopping containers.

Kube proxy

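• kube-proxy runs on each node and maintains the network rules (for example iptables or IPVS rules) that route traffic addressed to a Service to one of the Pods backing it. It derives these rules from the Service and endpoint objects it watches through the API server. Network policies are a separate mechanism enforced by the cluster's network plugin, not by kube-proxy or kube-controller-manager.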
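Conceptually, the rules kube-proxy maintains amount to a lookup from a Service's virtual address to the addresses of the Pods behind it. The sketch below imitates that lookup in plain Python. The `SERVICES` table, the IP addresses, and the `route` function are all made up for the example, and the random choice stands in for whatever load balancing the real proxy mode applies.

```python
import random

# Hypothetical Service table: (virtual IP, port) -> backend Pod addresses.
SERVICES = {
    ("10.96.0.10", 80): ["10.244.1.5:8080", "10.244.2.7:8080"],
}


def route(cluster_ip, port, rng=random):
    """Pick a backend Pod for traffic sent to a Service address."""
    backends = SERVICES.get((cluster_ip, port))
    if not backends:
        return None  # no Service at this address, or no ready backends
    return rng.choice(backends)


print(route("10.96.0.10", 80))  # one of the two backend addresses
```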

05. What is the difference between kubectl and kubelet?

kubectl and kubelet are two important components in the Kubernetes architecture, but they serve different purposes. Here are the key differences between kubectl and kubelet:

Functionality: kubectl is a command-line tool used to manage Kubernetes clusters. It allows developers and operations teams to create, inspect, and modify Kubernetes objects such as pods, services, and deployments. On the other hand, kubelet is a node agent that runs on each node in the Kubernetes cluster. It is responsible for managing the state of the individual nodes in the cluster and ensuring that containers are running and healthy on the node.

Role in the cluster: kubectl runs on a client machine and interacts with the Kubernetes API server to manage the cluster. It can be used to manage any part of the cluster from anywhere, as long as the user has the appropriate credentials and permissions. On the other hand, kubelet runs on each node in the cluster and is responsible for managing the containers running on that node. It communicates with the API server to receive instructions on which containers to run and how to manage them.

User interface: kubectl provides a command-line interface for interacting with the Kubernetes API server. It supports a wide range of commands and options for managing different aspects of the cluster. On the other hand, kubelet does not provide a user interface. It runs as a background process on each node in the cluster and communicates with the API server through the Kubernetes API.

In summary, kubectl is used to manage the cluster from a client machine, while kubelet manages the containers running on each node in the cluster.
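The division of labour can be sketched with a toy model in which a dictionary plays the part of the API server's stored state. Everything here is hypothetical: the function names `kubectl_apply` and `kubelet_sync` only mimic the two roles, and the node is assigned directly instead of by the scheduler to keep the example short.

```python
# Toy stand-in for the state held by the API server: pod name -> spec.
api_server_pods = {}


def kubectl_apply(name, image, node):
    """Client side: submit the desired state to the API server."""
    api_server_pods[name] = {"image": image, "node": node}


def kubelet_sync(node_name, running):
    """Node side: work out what to start and stop on this node."""
    wanted = {name for name, spec in api_server_pods.items()
              if spec["node"] == node_name}
    to_start = sorted(wanted - running)   # assigned here but not running yet
    to_stop = sorted(running - wanted)    # running here but no longer wanted
    return to_start, to_stop


# A user declares two pods from a workstation.
kubectl_apply("web", "nginx", "node-1")
kubectl_apply("db", "postgres", "node-2")

# The agent on node-1 only acts on pods bound to node-1.
print(kubelet_sync("node-1", {"old-job"}))
```

The user never contacts the node, and the node agent never takes commands from the user; both only talk to the API server.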

06. Explain the role of the API server?

The API server is a core component of the Kubernetes Control Plane that provides a central hub for managing the state of the Kubernetes cluster. It is responsible for exposing the Kubernetes API, which is a RESTful API that can be used to manage and monitor the Kubernetes cluster.

The API server acts as the front end for the Kubernetes Control Plane, receiving requests from various clients such as kubectl, the kubelet, and the controllers. It authenticates each client, checks that the client has the necessary permissions to perform the requested action, and validates the request. The API server then processes the request, modifying the state of the Kubernetes cluster as necessary.

The API server also interacts with the etcd key-value store to store and retrieve configuration data about the state of the cluster. This data includes information about the nodes in the cluster, the pods running on each node, and other Kubernetes objects such as services, deployments, and replicasets.

In addition to providing a central hub for managing the state of the Kubernetes cluster, the API server also plays an important role in ensuring the security of the cluster. It enforces authentication and authorization policies, ensuring that only authorized users have access to the Kubernetes API.
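The order of checks described above (who is calling, are they allowed, is the request valid, then store it) can be sketched as a small request handler. This is an illustrative model only: the token table, the permission set, the `store` dictionary standing in for etcd, and the `handle_request` function are all assumptions, and the real API server uses pluggable authentication, authorization, and admission mechanisms.

```python
# Hypothetical credentials and permissions for the example.
TOKENS = {"token-alice": "alice", "token-bob": "bob"}
PERMISSIONS = {
    ("alice", "create", "pods"),
    ("alice", "get", "pods"),
    ("bob", "get", "pods"),
}
store = {}  # stands in for etcd


def handle_request(token, verb, resource, name=None, body=None):
    """Return (HTTP status, payload) for a simplified API request."""
    # 1. Authentication: who is making the request?
    user = TOKENS.get(token)
    if user is None:
        return 401, "Unauthorized"

    # 2. Authorization: may this user perform this verb on this resource?
    if (user, verb, resource) not in PERMISSIONS:
        return 403, "Forbidden"

    # 3. Validation and persistence for writes.
    if verb == "create":
        if not body or "image" not in body:
            return 422, "Unprocessable Entity"
        store[(resource, name)] = body
        return 201, "Created"

    # 4. Reads are served from the stored state.
    obj = store.get((resource, name))
    return (200, obj) if obj is not None else (404, "Not Found")


print(handle_request("token-bob", "create", "pods", "web", {"image": "nginx"}))
print(handle_request("token-alice", "create", "pods", "web", {"image": "nginx"}))
```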

Overall, the API server is a critical component of the Kubernetes architecture, providing a central hub for managing and monitoring the state of the Kubernetes cluster, enforcing security policies, and exposing the Kubernetes API to clients.